Dear All,

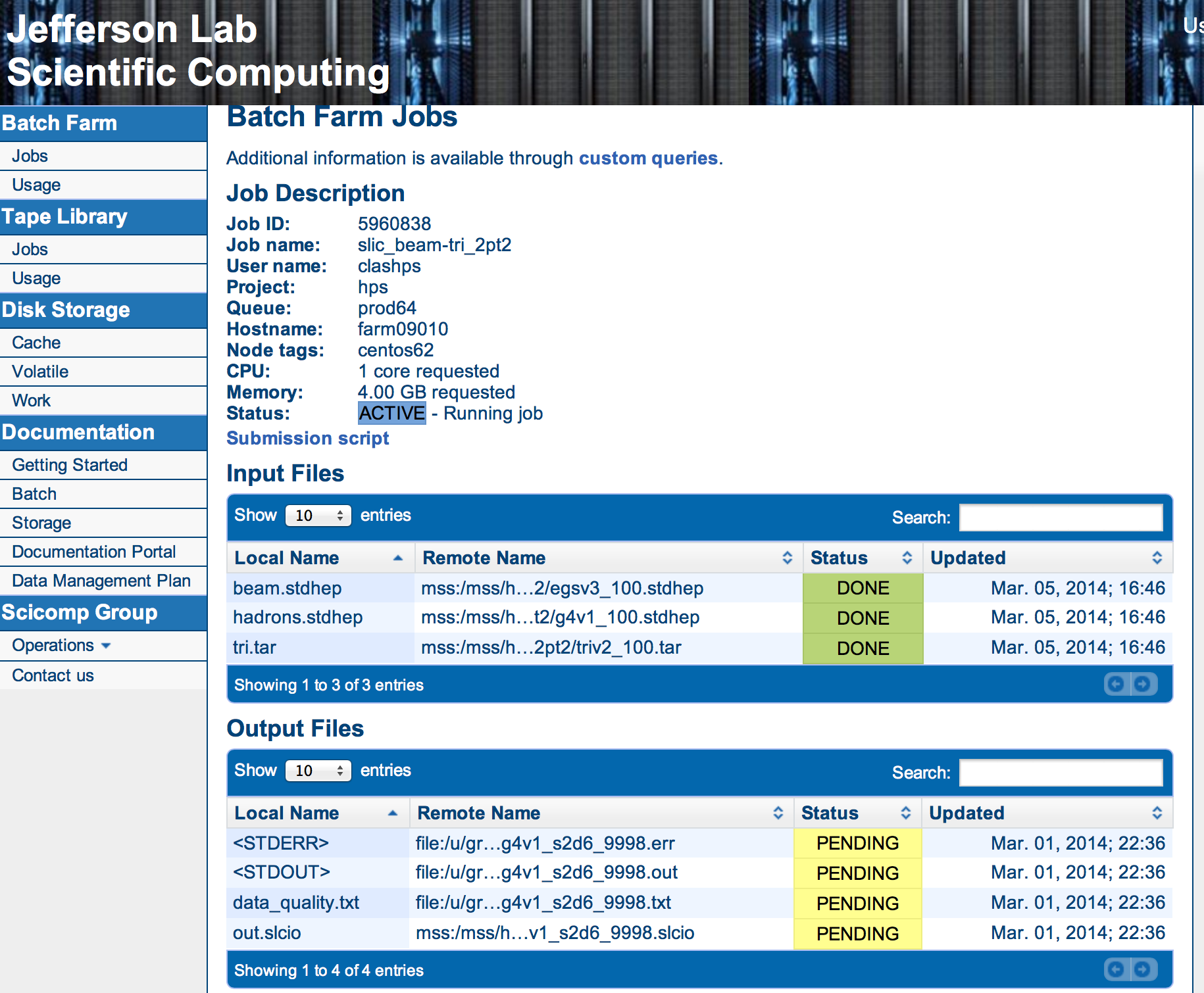

While here at JLab, I was told in passing that our jobs on the JLab farm are taking up too much memory. The farm systems have 2 GB per core (I think?) and some of our jobs are asking for 4 GB. That means each such job is actually using up the resources of two cores.
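As a rough illustration of that arithmetic, here is a minimal sketch. It assumes the farm effectively charges a job one core for every 2 GB it requests, rounded up; the 2 GB figure is the unconfirmed number quoted above, and the function name `cores_occupied` is made up for this example, not part of any farm tool.

```python
import math

def cores_occupied(requested_gb, gb_per_core=2.0):
    """Number of cores a job effectively ties up, given its memory request.

    Assumption: memory is shared out evenly per core, so a job asking for
    more than one core's share blocks additional cores.
    """
    return max(1, math.ceil(requested_gb / gb_per_core))

print(cores_occupied(4.0))  # a 4 GB job
print(cores_occupied(2.0))  # a job that fits in one core's share
```

Under this assumption, bringing a job's request down from 4 GB to 2 GB or less would halve the number of cores it occupies.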

I am wondering if this is really a required footprint for our code, or whether there are ways to reduce the memory usage. For full-scale processing of our real data, this could become a critical issue.

What is the memory footprint of our reconstruction code, and what does it depend on?

What causes it to be so large, and can we reduce it?

For the current MDC, perhaps it would be possible to split the SLIC step, which takes little memory, from the reconstruction step, which takes a lot. This would increase our throughput on the farm, since the low-memory SLIC jobs could then run on more nodes.

Best,

Maurik
